Data Testing on Microsoft Fabric with HEDDA.IO

In this article, I will focus on data integration, in particular on data delivery into the RAW Zone, as it is known in the Lakehouse context. This does not mean that data testing and data quality processes end there. Applying them at this point is, however, a first and important step toward valid data and processes.


To ensure that the delivered data is valid and meets the requirements of the corresponding pipelines before the loading processes start, a data testing framework should be established. This framework verifies the various data requirements, which significantly reduces errors within the loading processes. Classic tests include checks for NULL values, for the existence of reference or dimension data, for temporal components, and more.

By implementing these data tests, organizations can significantly improve the reliability and accuracy of their data integration, leading to more efficient loading processes with far fewer errors.

Many checks can be set up quickly within notebooks, and their results can then control the subsequent steps. More comprehensive tests, triggering events, or notifying users, however, require considerably more implementation effort. Providing documentation or traceability for data stewards or business units also often requires dedicated access to the data, which typical notebooks or Spark environments generally do not offer in an accessible form.
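To illustrate the kind of hand-written notebook check described here, the following is a minimal plain-Python sketch. It is not HEDDA.IO code. The column names, the reference set `known_customers` and the fixed "as of" date are assumptions made for illustration. It covers the three classic tests named earlier (NULL values, reference data, temporal components) and uses their results to decide whether the load continues.

```python
from datetime import date

# Hypothetical rows delivered to the RAW Zone (illustrative data only).
rows = [
    {"order_id": 1, "customer_id": "C1", "order_date": date(2024, 5, 2)},
    {"order_id": 2, "customer_id": None, "order_date": date(2024, 5, 3)},
    {"order_id": 3, "customer_id": "C9", "order_date": date(2030, 1, 1)},
]
known_customers = {"C1", "C2"}  # assumed reference (dimension) data
today = date(2024, 6, 1)        # fixed "as of" date keeps the example deterministic


def run_checks(rows, known_customers, today):
    """Return a dict mapping each check name to its number of failing rows."""
    return {
        # NULL check on a mandatory column
        "customer_id_not_null": sum(r["customer_id"] is None for r in rows),
        # Reference check: non-NULL keys must exist in the dimension data
        "customer_id_known": sum(
            r["customer_id"] is not None and r["customer_id"] not in known_customers
            for r in rows
        ),
        # Temporal check: no order dates in the future
        "order_date_not_future": sum(r["order_date"] > today for r in rows),
    }


results = run_checks(rows, known_customers, today)
failed = {name: n for name, n in results.items() if n > 0}
if failed:
    # In a real notebook you might raise an exception here to stop later cells.
    print(f"Data tests failed, load aborted: {failed}")
else:
    print("All data tests passed, continuing with the load.")
```

Everything beyond this, such as notifications, history, and access for business users, would have to be built by hand. That additional effort is the gap the paragraph above describes.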

With HEDDA.IO, it is possible to easily and quickly build business rules for data validation, execute these rules before the actual integration processes, and continuously and transparently inform all stakeholders about the results. The new version of HEDDA.IO integrates additional features that facilitate the rapid and straightforward establishment of integrated data testing, especially in collaboration with Microsoft Fabric.

These enhancements in HEDDA.IO allow users to define and enforce business rules, ensuring data quality and compliance even before data enters the integration pipeline. The platform’s intuitive interface makes it easy for both technical and non-technical users to set up validation rules, reducing the need for extensive coding or configuration efforts.

Let’s take a closer look at the essential features that HEDDA.IO offers to establish comprehensive data testing:

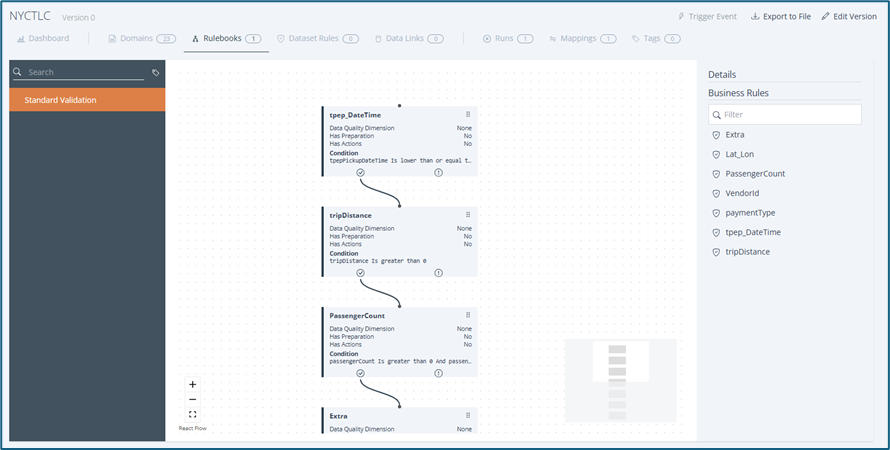

Business Rules

HEDDA.IO provides various business rules that can be directly applied to individual domains (columns) of a data frame. These rules allow for quick checks of domains for NULL values, setting value ranges for metrics such as revenue figures, specifying time frames for change dates, etc. Such rules ensure the fundamental quality of the data.
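The idea of a rule bound to a single domain (column) can be sketched in plain Python as follows. This is a hypothetical model for illustration, not the HEDDA.IO rule API. `DomainRule`, the rule names, the revenue bounds and the 2024 time frame are all assumptions. The range rule deliberately accepts NULL, so that a missing value is reported only once, by the NULL rule.

```python
from dataclasses import dataclass
from datetime import date
from typing import Any, Callable


@dataclass(frozen=True)
class DomainRule:
    """A rule bound to one domain (column); hypothetical, not the HEDDA.IO API."""
    name: str
    domain: str
    check: Callable[[Any], bool]


rules = [
    DomainRule("revenue_not_null", "revenue", lambda v: v is not None),
    # NULL passes here so a missing value is only reported by the NULL rule.
    DomainRule("revenue_in_range", "revenue",
               lambda v: v is None or 0 <= v <= 1_000_000),
    DomainRule("changed_in_2024", "changed_on",
               lambda v: v is not None and date(2024, 1, 1) <= v <= date(2024, 12, 31)),
]


def evaluate(row: dict, rules: list) -> list:
    """Return the names of all rules that the row violates."""
    return [r.name for r in rules if not r.check(row.get(r.domain))]


row_ok = {"revenue": 250.0, "changed_on": date(2024, 3, 15)}
row_bad = {"revenue": -10.0, "changed_on": date(2023, 12, 31)}
print(evaluate(row_ok, rules))   # []
print(evaluate(row_bad, rules))  # ['revenue_in_range', 'changed_in_2024']
```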

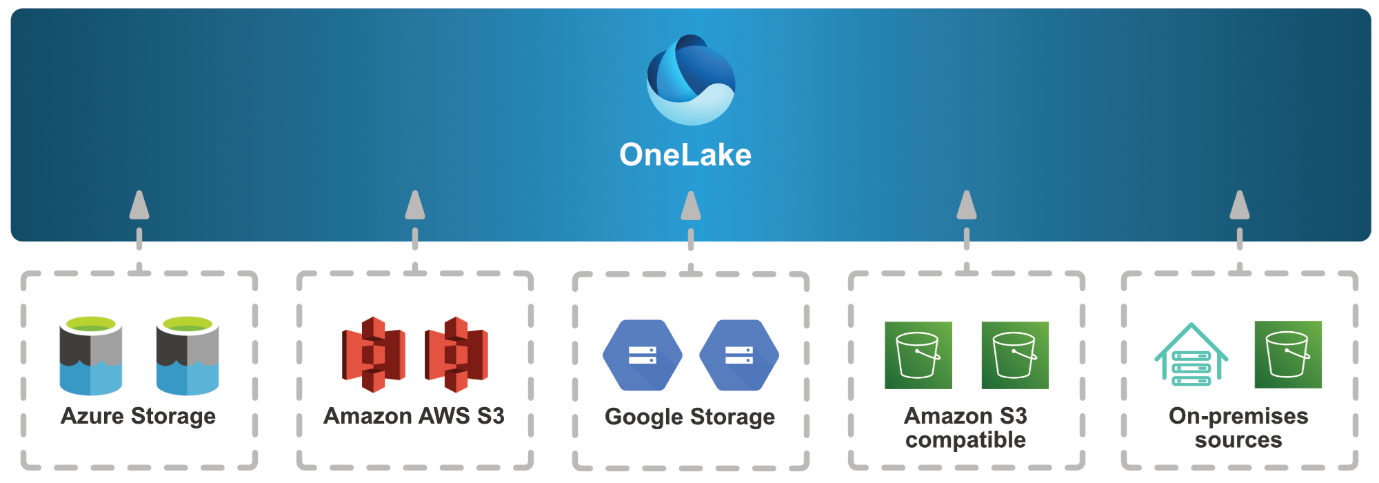

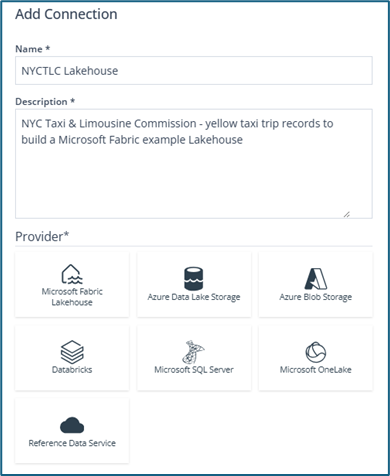

Data Links

Data Links enable verification of whether values in specific domains (columns) exist in external data sources. The new version integrates Data Links for both the Microsoft Fabric Lakehouse and OneLake, in addition to the already existing connections such as Azure Data Lake Storage, Databricks, and many more. This ensures that the data being loaded is indeed present in the master data, enhancing data integrity.
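Conceptually, a Data Link check asks which incoming values are missing from an external reference table. The minimal sketch below models that question with an in-memory SQLite table standing in for the Lakehouse or OneLake source. The table name `product`, the column `product_code` and the sample values are assumptions, and the code does not reflect how HEDDA.IO connects to these sources.

```python
import sqlite3

# In-memory SQLite table standing in for the external master-data source
# (for example a Lakehouse table); table and column names are assumptions.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE product (product_code TEXT PRIMARY KEY)")
conn.executemany("INSERT INTO product VALUES (?)", [("P-100",), ("P-200",)])


def missing_in_reference(values, conn):
    """Return the distinct values that do not exist in the reference table."""
    known = {code for (code,) in conn.execute("SELECT product_code FROM product")}
    return sorted({v for v in values if v not in known})


incoming = ["P-100", "P-300", "P-200", "P-300"]
print(missing_in_reference(incoming, conn))  # ['P-300']
```

Loading all reference keys into memory keeps the sketch short. For large dimension tables, an anti-join executed in the source system would scale better.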

The same functionality can also be used later in the transformation process – typically in the Quality or Silver Zone – to enrich data with values from reference tables.
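Enrichment is the same lookup used in the other direction: instead of only checking that a key exists, the matching reference value is copied into the row. The sketch below is a simple left-join-style lookup in plain Python. The `country_ref` table, the column names and the helper `enrich` are hypothetical.

```python
# Hypothetical reference table: country code -> country name.
country_ref = {"DE": "Germany", "NL": "Netherlands"}


def enrich(rows, ref, key, target):
    """Return copies of rows with `target` filled from `ref` via `key` (None if no match)."""
    return [{**row, target: ref.get(row[key])} for row in rows]


rows = [{"id": 1, "country": "DE"}, {"id": 2, "country": "FR"}]
print(enrich(rows, country_ref, "country", "country_name"))
# [{'id': 1, 'country': 'DE', 'country_name': 'Germany'}, {'id': 2, 'country': 'FR', 'country_name': None}]
```

Unmatched keys yield `None` rather than dropping the row. This mirrors a left join and keeps such rows visible for a later validity check.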

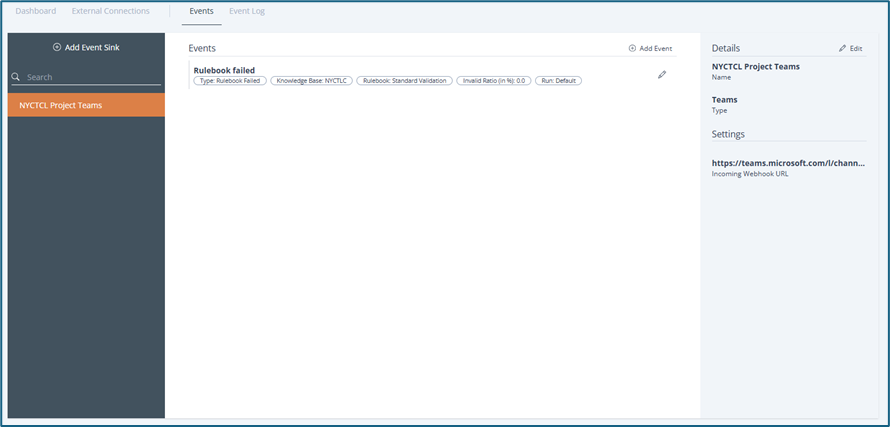

Events

Events allow users to respond to the results of data testing. HEDDA.IO can automatically send emails, post messages in Slack, Teams, or WebEx, or trigger additional data pipelines and other actions via WebHooks or Azure Event Grid based on the results.
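Reacting to test results boils down to a decision about which actions a given run should trigger. The sketch below makes that decision in plain Python and builds a JSON payload for it. It is not HEDDA.IO's event configuration. The channel labels, the `RunResult` type and the 5 % threshold are assumptions, and the actual delivery (e-mail, Teams message, webhook POST) is left out.

```python
import json
from dataclasses import dataclass


@dataclass
class RunResult:
    run_id: str
    total_rows: int
    invalid_rows: int


def events_for(result: RunResult, max_invalid_ratio: float = 0.05) -> list:
    """Decide which (channel, JSON payload) events a run should trigger.

    Channel names are hypothetical labels; sending the events is not implemented.
    """
    ratio = result.invalid_rows / result.total_rows if result.total_rows else 0.0
    payload = json.dumps({"run_id": result.run_id,
                          "invalid_rows": result.invalid_rows,
                          "invalid_ratio": round(ratio, 4)})
    if result.invalid_rows == 0:
        # Clean run: start the next pipeline.
        return [("webhook:start_next_pipeline", payload)]
    events = [("teams:data_stewards", payload)]
    if ratio > max_invalid_ratio:
        # Escalate when the share of invalid rows exceeds the threshold.
        events.append(("email:data_owner", payload))
    return events


print([ch for ch, _ in events_for(RunResult("r1", 1000, 0))])    # ['webhook:start_next_pipeline']
print([ch for ch, _ in events_for(RunResult("r2", 1000, 120))])  # ['teams:data_stewards', 'email:data_owner']
```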

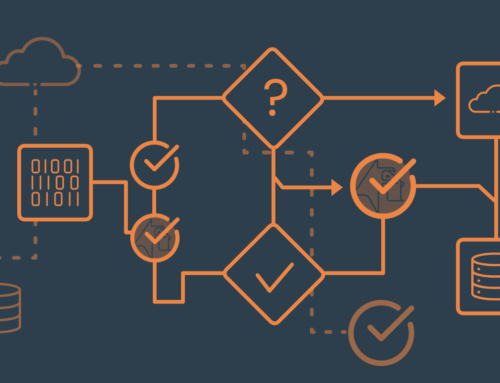

Integration

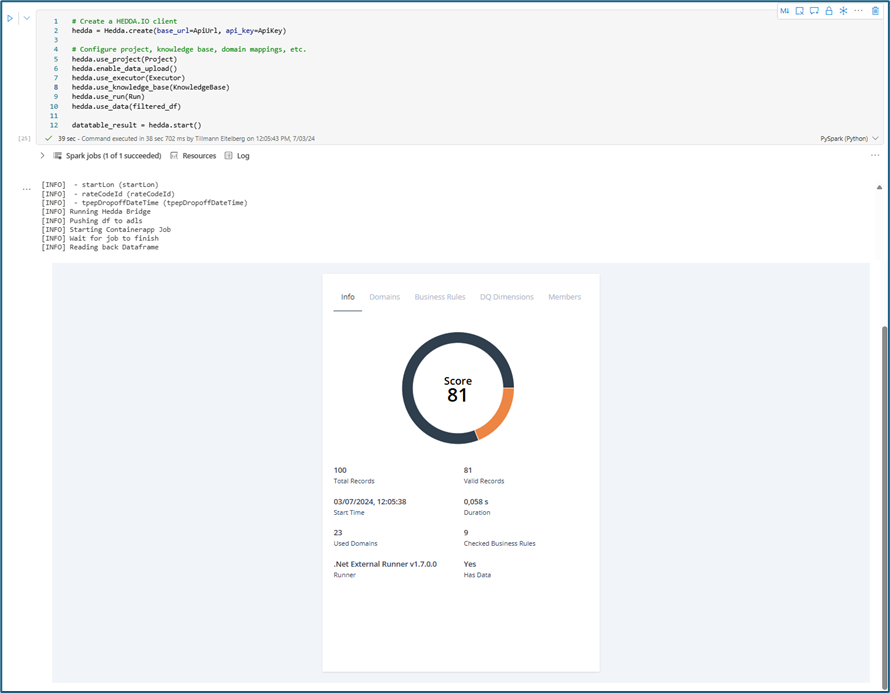

With HEDDA.IO pyRunner, HEDDA.IO can be integrated directly into a notebook within Microsoft Fabric with just a few lines of code. This allows existing processes to be quickly and easily extended by adding just one cell, and the tests can be executed before the respective processing. The results can be evaluated directly in the notebook, enabling subsequent workflows to process only valid data or to abort the process if necessary.
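Once per-row results are available, a notebook can either process only valid data or abort. The sketch below shows that pattern in plain Python. It deliberately does not use the pyRunner API, whose calls are not shown in this article. `split_and_gate`, the threshold values and the validity rule `has_amount` are hypothetical.

```python
def split_and_gate(rows, is_valid, max_invalid_ratio):
    """Split rows into (valid, invalid); raise if the invalid share is too high."""
    valid, invalid = [], []
    for row in rows:
        (valid if is_valid(row) else invalid).append(row)
    if rows and len(invalid) / len(rows) > max_invalid_ratio:
        # Abort: downstream steps should not run on this delivery.
        raise RuntimeError(f"{len(invalid)} of {len(rows)} rows invalid, aborting load")
    return valid, invalid


def has_amount(row):
    """Hypothetical validity rule: the amount must not be NULL."""
    return row["amount"] is not None


rows = [{"amount": 10}, {"amount": None}, {"amount": 5}]

valid, invalid = split_and_gate(rows, has_amount, max_invalid_ratio=0.5)
print(len(valid), len(invalid))  # 2 1

try:
    split_and_gate(rows, has_amount, max_invalid_ratio=0.1)
except RuntimeError as err:
    print(err)  # 1 of 3 rows invalid, aborting load
```

The tolerated share of invalid rows is a design decision per pipeline. A threshold of 0 turns the gate into "abort on any invalid row".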


If you use a Spark environment other than Microsoft Fabric, you can use our pyRunner there as well, and access to your Fabric Lakehouse via Data Links is still supported. In addition to the pyRunner for Spark environments, HEDDA.IO is also available as a .NET runner that you can use in Polyglot Notebooks in VS Code, in a container app job, and in many other .NET-capable solutions.

Reporting

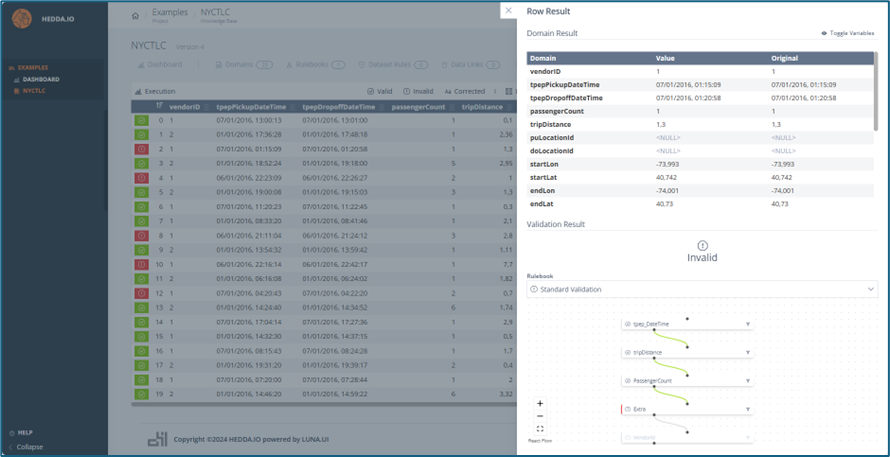

Each execution generates extensive statistics about the tested data, detailing all applied business rules. Users can directly review these statistics in the notebook using the HEDDA.IO widget (as you can see in the Integration section) or later through the HEDDA.IO UI for each execution. With the Analyze Data function, users can delve into the data and review each business rule and the entire rule flow. With the new Excel Add-in, users can also load processed data directly into Excel, either completely or filtered by valid or invalid data, to initiate further analyses and manual processes.
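The core of such per-rule statistics can be sketched as a simple aggregation over the rule violations of each row. This is a plain-Python illustration, not the HEDDA.IO reporting model. The input format (one list of violated rule names per row) and the rule names are assumptions.

```python
from collections import Counter


def rule_statistics(violations_per_row, rule_names):
    """Summarise per-rule failure counts and overall valid/invalid row counts."""
    failures = Counter(name for names in violations_per_row for name in names)
    return {
        "rows": len(violations_per_row),
        "valid_rows": sum(1 for names in violations_per_row if not names),
        # Counter returns 0 for rules that never failed.
        "per_rule": {name: failures[name] for name in rule_names},
    }


violations = [[], ["revenue_in_range"], ["revenue_in_range", "changed_in_2024"], []]
stats = rule_statistics(violations, ["revenue_not_null", "revenue_in_range", "changed_in_2024"])
print(stats)
# {'rows': 4, 'valid_rows': 2, 'per_rule': {'revenue_not_null': 0, 'revenue_in_range': 2, 'changed_in_2024': 1}}
```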


This is just one of many scenarios and process steps in which HEDDA.IO, in conjunction with Microsoft Fabric, can drastically improve data quality and, consequently, data integration processes.

If you have any further questions about HEDDA.IO, feel free to contact us for a live demo.

Tillmann Eitelberg

Tillmann Eitelberg is CEO and co-founder of oh22information services GmbH, which specializes in data management and data governance and offers its own cloud-born data quality solution, HEDDA.IO.

Tillmann is a regular speaker at international conferences and an active blogger and podcaster at DECOMPOSE.IO.

He has open-sourced several SSIS components and is co-author of Power BI for Dummies (German Edition). Tillmann has been a Microsoft Data Platform MVP since 2013. He is a user group leader for PASS Germany RG Rheinland (Cologne) and was a member of the Microsoft Azure Data Community Advisory Board.