FIND YOUR PERFECT DATA QUALITY PROCESS

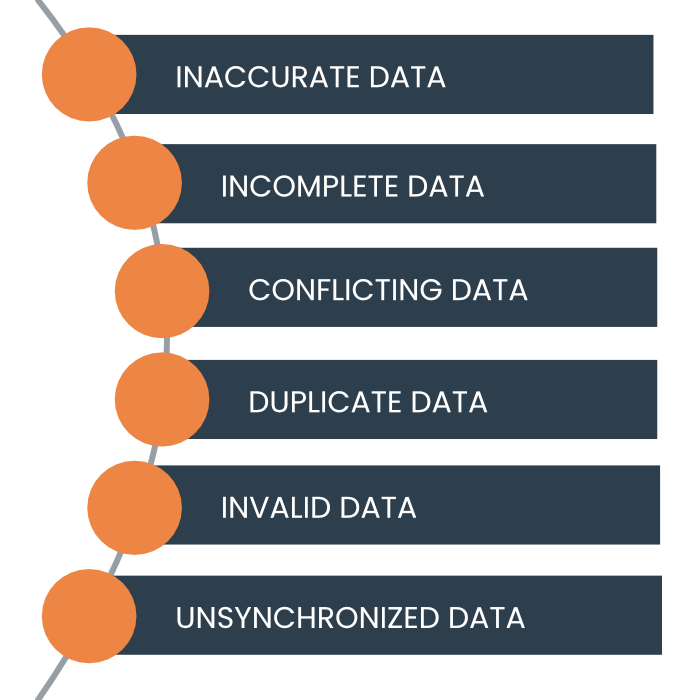

Good data quality is the backbone of successful organizations. Yet, bad data can derail operations, skew analysis, and undermine strategic decisions. The cost? IBM estimates $3 trillion annually, while Gartner cites an average of $12.9 million per organization per year. Beyond monetary loss, bad data can damage reputations, result in regulatory penalties, and squander business opportunities.

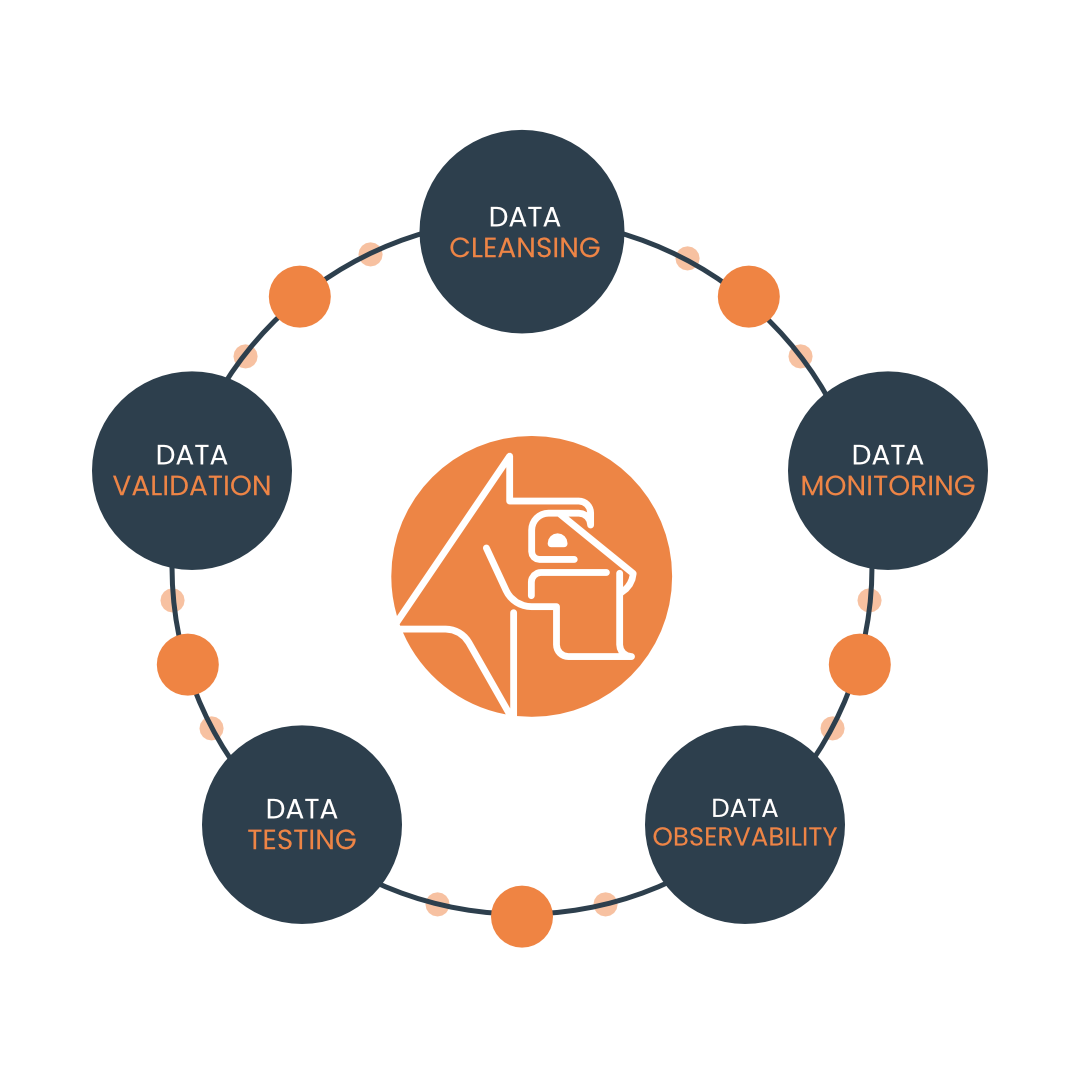

But what’s the solution? The ideal process must cover data validation, cleansing, monitoring, testing, and observability—key pillars ensuring data accuracy, completeness, and reliability.

With that in mind, is there a reliable Data Quality solution that covers all five pillars, and why is it HEDDA.IO?

Data Validation

Data Validation is the foundation for every other step in a Data Quality process. Effective Data Validation is based on:

- Accuracy: Ensures data values are correct and reflect real-world scenarios.

- Completeness: Identifies missing or null values critical to operations.

- Consistency: Confirms uniformity across datasets and avoids duplication.

- Compliance: Ensures adherence to business rules and data formats, such as valid dates or standardized IDs.
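
To make the four criteria above concrete, here is a minimal sketch in plain Python. It is not HEDDA.IO's API; the field names, the `C0001`-style ID format, and the 0 to 120 age range are assumptions chosen purely for illustration.

```python
import re
from datetime import datetime

# Hypothetical business rule: customer IDs look like "C" plus four digits.
ID_PATTERN = re.compile(r"^C\d{4}$")

def is_valid_date(value):
    """Return True if value parses as a YYYY-MM-DD calendar date."""
    try:
        datetime.strptime(value, "%Y-%m-%d")
        return True
    except (TypeError, ValueError):
        return False

def validate(records):
    """Return a list of (row_index, field, problem) tuples."""
    errors = []
    seen_ids = set()
    for i, rec in enumerate(records):
        cid = rec.get("customer_id")
        # Completeness: required fields must be present and non-empty.
        for field in ("customer_id", "signup_date", "age"):
            if rec.get(field) in (None, ""):
                errors.append((i, field, "missing"))
        # Compliance: IDs and dates must follow the agreed formats.
        if cid and not ID_PATTERN.match(cid):
            errors.append((i, "customer_id", "bad format"))
        if rec.get("signup_date") and not is_valid_date(rec["signup_date"]):
            errors.append((i, "signup_date", "bad format"))
        # Accuracy: values must be plausible in the real world.
        age = rec.get("age")
        if isinstance(age, int) and not 0 <= age <= 120:
            errors.append((i, "age", "out of range"))
        # Consistency: the same ID must not appear twice.
        if cid:
            if cid in seen_ids:
                errors.append((i, "customer_id", "duplicate"))
            seen_ids.add(cid)
    return errors

# Made-up sample data.
records = [
    {"customer_id": "C0001", "signup_date": "2024-01-15", "age": 34},
    {"customer_id": "C0001", "signup_date": "2024-02-30", "age": 34},
    {"customer_id": "X1", "signup_date": "", "age": 150},
]
errors = validate(records)
for row, field, problem in errors:
    print(f"row {row}: {field} is {problem}")
```

Returning the row index with every finding is what makes row-level reporting possible later on.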

HEDDA.IO excels at Data Validation, enabling:

- Rule customization tailored to business needs, with instant previews.

- Granular reporting that pinpoints errors to specific lines of data.

- Integration into workflows to automate error handling and standardization.

Data Cleansing

High-quality data doesn’t happen by accident—it requires effective Data Cleansing, the process of identifying and correcting errors, inconsistencies, and inaccuracies in your data. So, what does data cleansing involve?

- Error Detection: Identifying inaccuracies, from typos to outdated values.

- Duplicate Removal: Eliminating redundant records to streamline data.

- Completeness: Filling in missing values or removing unusable records.

- Validation Integration: Confirming changes adhere to Business Rules.
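
The cleansing steps above can be sketched in a few lines of Python. This is an illustration only, not how HEDDA.IO implements cleansing: the `email` and `country` fields, the `"UNKNOWN"` fill value, and the keep-the-first-duplicate policy are all assumptions.

```python
def cleanse(records):
    """Return (cleaned_records, audit_log) without mutating the input."""
    cleaned, audit, seen = [], [], set()
    for i, rec in enumerate(records):
        row = dict(rec)  # work on a copy so the source stays untouched
        raw_email = row.get("email")
        # Error detection and standardization: trim and lowercase e-mails.
        email = raw_email.strip().lower() if isinstance(raw_email, str) else ""
        # Completeness: a row without its key field is unusable.
        if not email:
            audit.append((i, "missing email dropped"))
            continue
        if email != raw_email:
            audit.append((i, "email standardized"))
            row["email"] = email
        # Duplicate removal: keep the first row per e-mail address.
        if email in seen:
            audit.append((i, "duplicate dropped"))
            continue
        seen.add(email)
        # Completeness: fill optional fields with an explicit placeholder.
        if not row.get("country"):
            audit.append((i, "country filled"))
            row["country"] = "UNKNOWN"
        cleaned.append(row)
    return cleaned, audit

# Made-up sample data.
raw = [
    {"email": " Ann@Example.com ", "country": "DE"},
    {"email": "ann@example.com", "country": ""},
    {"email": None, "country": "FR"},
    {"email": "bob@example.com", "country": None},
]
cleaned, audit = cleanse(raw)
```

The audit log records every correction with its row index, so each change stays traceable. In a real process, the cleaned rows would then be run through the validation rules again to confirm they still satisfy the Business Rules.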

HEDDA.IO elevates data cleansing to a new level by offering:

- Automated error detection with customizable rules.

- Seamless removal of duplicates and standardization of formats.

- Continuous monitoring to catch and fix emerging issues.

- Detailed auditing to track every correction, ensuring transparency.

Data Monitoring

In the dynamic world of Data Management, Data Monitoring is crucial to maintaining Data Quality. It ensures your data remains accurate, complete, and trustworthy over time, preventing costly errors and missed opportunities. Why is Data Monitoring essential?

- Real-Time Oversight: Continuously checks data as it flows through systems to detect issues immediately.

- Proactive Alerts: Notifies stakeholders of inconsistencies, anomalies, or breaches in predefined rules.

- Anomaly Detection: Identifies unexpected patterns, such as sudden spikes or missing values, that could signal problems.

- Trend Analysis: Tracks Data Quality over time, highlighting persistent issues or improvements.
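
As a rough illustration of proactive alerts and anomaly detection, the sketch below flags a daily row count that sits far from its recent history and a null rate that breaks a predefined rule. The three-sigma cutoff, the 5% null-rate limit, and the sample numbers are assumptions; real monitoring tools, HEDDA.IO included, may use different methods.

```python
from statistics import mean, stdev

def is_anomaly(history, latest, max_sigma=3.0):
    """Flag latest if it lies more than max_sigma standard deviations from the mean of history."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > max_sigma

def monitor(history, latest_count, null_rate, max_null_rate=0.05):
    """Return alert messages for the latest load; an empty list means all clear."""
    alerts = []
    # Anomaly detection: sudden spike or drop in volume.
    if is_anomaly(history, latest_count):
        alerts.append(f"row count anomaly: {latest_count}")
    # Rule breach: too many missing values.
    if null_rate > max_null_rate:
        alerts.append(f"null rate {null_rate:.1%} exceeds {max_null_rate:.1%}")
    return alerts

# Made-up row counts for the last seven daily loads.
row_counts = [1000, 1020, 990, 1010, 1005, 995, 1015]
alerts = monitor(row_counts, latest_count=400, null_rate=0.12)
for message in alerts:
    print("ALERT:", message)
```

Storing the metric after each run is what later enables trend analysis, since the same history list feeds both the anomaly check and any chart of Data Quality over time.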

HEDDA.IO transforms Data Monitoring into a proactive, automated process by offering:

- Source Monitoring: Evaluates data at its origin to ensure it meets quality standards before entering your pipeline.

- Automated Alerts: Sends notifications when thresholds or rules are violated, enabling immediate action.

- Custom Metrics: Tracks accuracy, timeliness, completeness, and more, tailored to your business needs.

- DevOps Integration: Embeds monitoring into pipelines for seamless, continuous checks during development and operation.

- Detailed Lineage: Provides insights into how and when data changes occur, ensuring transparency.

Data Testing

Data Quality doesn’t stop at collection: it requires thorough data testing to ensure the data meets the standards your business sets. Testing verifies that data is accurate, consistent, and reliable before it’s used in critical business processes. Without it, errors can cascade through systems, leading to flawed insights and costly decisions. What makes data testing indispensable?

- Accuracy Validation: Ensures data values reflect real-world scenarios.

- Workflow Assurance: Verifies data processing steps like ETL are functioning correctly.

- Integration Checks: Confirms that data from multiple sources combines seamlessly.

- Performance Validation: Assesses system performance under various conditions to prevent bottlenecks or failures.

- Regression Testing: Ensures changes in pipelines or rules don’t introduce new issues.
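
A few of these checks can be sketched as ordinary automated tests. The example below assumes a hypothetical ETL step, `transform`, that converts cent amounts to euros; the function, field names, and baseline snapshot are invented for illustration and do not describe HEDDA.IO's test runner.

```python
def transform(rows):
    """Hypothetical ETL step: convert cent amounts to euros and tag the currency."""
    return [
        {"order_id": r["order_id"], "amount_eur": r["amount_cents"] / 100, "currency": "EUR"}
        for r in rows
    ]

def run_tests(source, baseline):
    """Run the checks and return a dict of test name -> passed (True/False)."""
    output = transform(source)
    results = {}
    # Workflow assurance: the step must not lose or invent rows.
    results["row_count"] = len(output) == len(source)
    # Accuracy validation: totals must reconcile between source and target.
    source_total = sum(r["amount_cents"] for r in source)
    target_total = round(sum(o["amount_eur"] for o in output) * 100)
    results["totals"] = source_total == target_total
    # Regression testing: output must match the stored baseline snapshot.
    results["regression"] = output == baseline
    return results

# Made-up source rows and the snapshot saved from a known-good run.
source = [
    {"order_id": 1, "amount_cents": 1999},
    {"order_id": 2, "amount_cents": 500},
]
baseline = [
    {"order_id": 1, "amount_eur": 19.99, "currency": "EUR"},
    {"order_id": 2, "amount_eur": 5.0, "currency": "EUR"},
]
results = run_tests(source, baseline)
print(results)
```

Running a script like this on every pipeline change is the simplest form of regression testing: if someone edits `transform`, the baseline comparison fails until the change is reviewed and the snapshot is updated on purpose.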

HEDDA.IO takes data testing to the next level with:

- Automated Testing: Run tests directly in DevOps pipelines for real-time quality checks.

- Customizable Rules: Test against business-specific standards with immediate feedback on changes.

- Granular Reporting: Pinpoint exact data rows with issues, enabling precise fixes.

- Scenario Simulation: Preview the impact of rule or source changes on your data.

- Historical Comparisons: Track changes over time to understand trends and impacts.

Data Observability

Observability provides deep insights into the health, flow, and transformations of your data, ensuring issues are detected and resolved before they impact your business. Why prioritize Data Observability?

- Real-Time Insights: Continuously monitor data pipelines for bottlenecks, inconsistencies, and failures.

- Transparency: Understand data lineage to track how data evolves through your systems.

- Proactive Resolution: Identify issues early and notify teams to take immediate action.

- Impact Analysis: Assess how changes to pipelines or sources affect downstream data.
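
Lineage and impact analysis come down to walking a dependency graph. The sketch below assumes a hand-written lineage map with invented dataset names; observability tools typically collect this information automatically.

```python
from collections import deque

# Hypothetical lineage: each dataset maps to the datasets built from it.
LINEAGE = {
    "crm_export": ["customers_clean"],
    "web_orders": ["orders_clean"],
    "customers_clean": ["sales_mart"],
    "orders_clean": ["sales_mart", "finance_report"],
    "sales_mart": ["exec_dashboard"],
}

def downstream(lineage, start):
    """Return every dataset affected by a change to start, nearest first."""
    seen, queue, affected = {start}, deque([start]), []
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                affected.append(child)
                queue.append(child)
    return affected

impacted = downstream(LINEAGE, "web_orders")
print("A change to web_orders affects:", ", ".join(impacted))
```

The breadth-first walk lists the closest consumers first, which is usually the order in which teams need to be notified.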

HEDDA.IO delivers unmatched data observability by offering:

- Continuous Monitoring: Track key indicators like accuracy, timeliness, and consistency across your entire data pipeline.

- Automated Alerts: Notify stakeholders of anomalies or threshold breaches in real time.

- Data Health Dashboards: Visualize trends in data quality and pinpoint areas for improvement.

- Seamless Integration: Embed observability into existing pipelines and systems without disruption.

Conclusion

The risk of bad data is not just a possibility—it’s a reality. Organizations must recognize that it’s less about avoiding bad data entirely and more about being prepared to handle it effectively. This means having robust processes in place to monitor, validate, and cleanse data in real time, ensuring you can quickly detect and respond to changes before they disrupt operations.

Waiting to act after the damage is done, much like implementing a backup after data loss, is simply not an option. A successful data strategy begins with providing high-quality data—data that is reliable, accurate, and fit for purpose. With a solution like HEDDA.IO, you gain the tools to empower all endpoints to validate and cleanse data at its source, seamlessly integrate new functions into existing workflows, and adapt future processes with ease thanks to high-performance runners and open interfaces.

Data validation and quality assurance are not one-off tasks—they must be integrated into every loading process or implemented as stand-alone analysis mechanisms. HEDDA.IO’s ability to enforce complex business rules without disrupting workflows ensures your organization can maintain high standards without compromising efficiency.

Ready to take control of your Data Quality? Contact us today or request a test account to experience how HEDDA.IO can transform your data strategy.