As data platforms become more dynamic, distributed, and business-critical, Data Testing has become a key discipline for ensuring data reliability, trust, and regulatory compliance.

Whether you’re building data pipelines, enriching source data, or applying advanced analytics, you need confidence that the rules applied to your data are correct, consistent, and version-controlled.

That’s where HEDDA.IO steps in.

What Is Data Testing – and Why Does It Matter?

Data Testing refers to the automated validation of data based on formalized business logic, rules, or patterns.

The goal is not just to check schema conformity or completeness, but to verify that the data makes sense in your specific business context.
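To make that distinction concrete, here is a minimal sketch in plain Python. The order records, field names, and the rule itself are hypothetical illustrations and not HEDDA.IO rules: every row passes a schema-level check, yet one of them violates a simple business rule.

```python
from datetime import date

# Hypothetical order records; every field has the expected type.
orders = [
    {"order_id": 1, "order_date": date(2024, 3, 1), "ship_date": date(2024, 3, 4)},
    {"order_id": 2, "order_date": date(2024, 3, 5), "ship_date": date(2024, 3, 2)},
]


def schema_ok(row):
    """Schema-level check: required fields exist and have the right types."""
    return (
        isinstance(row.get("order_id"), int)
        and isinstance(row.get("order_date"), date)
        and isinstance(row.get("ship_date"), date)
    )


def ships_after_order(row):
    """Illustrative business rule: an order cannot ship before it was placed."""
    return row["ship_date"] >= row["order_date"]


schema_failures = [r["order_id"] for r in orders if not schema_ok(r)]
rule_violations = [r["order_id"] for r in orders if not ships_after_order(r)]

print(schema_failures)  # []
print(rule_violations)  # [2]
```

Order 2 is structurally valid but ships three days before it was placed, which only a rule expressed in business terms can catch.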

Typical test scenarios include:

- Checking data quality across ingestion layers

- Validating transformations in pipelines

- Comparing source vs. target data

- Monitoring data rule violations over time

- Creating audit trails for regulatory reporting

And most importantly: you want to test before data goes live.
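As one illustration of the scenarios listed above, a source-versus-target comparison can be sketched in a few lines of plain Python. The helper name `compare_by_key` and the sample rows are assumptions made for this example; on real volumes the same idea would typically be expressed as a join on the data platform.

```python
def compare_by_key(source, target, key):
    """Report keys missing from target, unexpected in target, and rows that differ."""
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}
    return {
        "missing": sorted(src.keys() - tgt.keys()),
        "unexpected": sorted(tgt.keys() - src.keys()),
        "changed": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


# Hypothetical rows before and after a pipeline step.
source_rows = [
    {"id": 1, "amount": 100},
    {"id": 2, "amount": 250},
    {"id": 3, "amount": 75},
]
target_rows = [
    {"id": 1, "amount": 100},
    {"id": 2, "amount": 205},  # value altered in transit
    {"id": 4, "amount": 10},   # row that never existed in the source
]

result = compare_by_key(source_rows, target_rows, "id")
print(result)  # {'missing': [3], 'unexpected': [4], 'changed': [2]}
```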

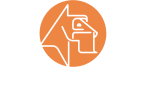

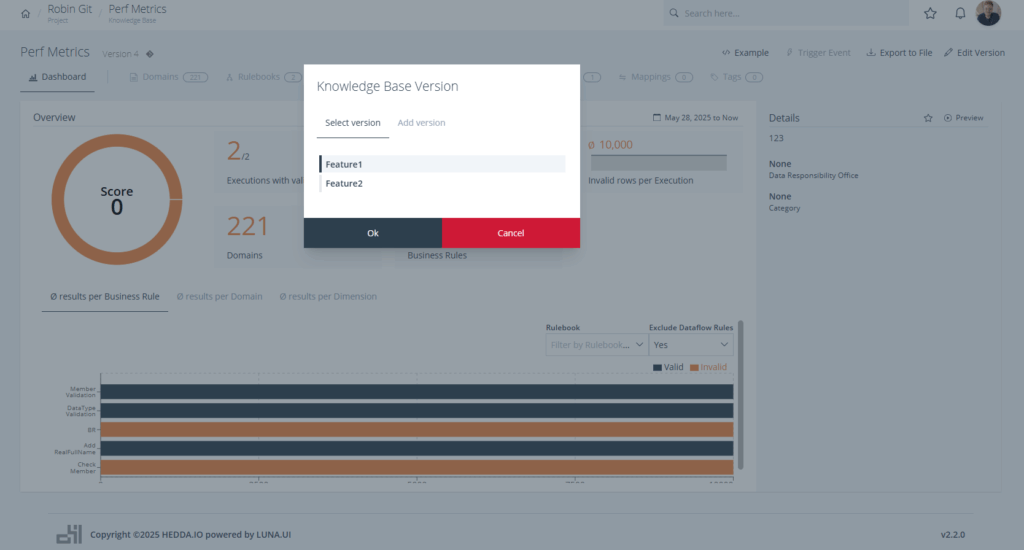

Versioned Business Rules in HEDDA.IO

A key component of scalable data testing is rule versioning, and HEDDA.IO builds it directly into how rules are managed.

Instead of hard-coding Business Rules into notebooks or transformation logic, HEDDA.IO manages rules in centralized Knowledge Bases – and starting with Version 2.0, these Knowledge Bases are Git-integrated and fully version-controlled.

Each Knowledge Base can have multiple Named Edit Versions, which allows you to:

- Develop multiple features or rule changes in parallel

- Assign dedicated rule versions to environments (Dev, QA, Prod)

- Track changes with full Git commit history

- Collaborate across teams without breaking the mainline

This versioning approach brings the same rigor to data logic that developers have long applied to code. And because every version can be referenced by name, it fits into CI/CD flows.
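Assigning rule versions to environments could look like the following sketch. The version names, the `DATA_ENV` environment variable, and the `resolve_version` helper are all hypothetical; the only idea taken from the text is that each environment is pinned to one Named Version.

```python
import os

# Hypothetical mapping of environments to Named Versions.
# The version names are placeholders, not real HEDDA.IO versions.
VERSION_BY_ENVIRONMENT = {
    "dev": "feature-new-address-rules",
    "qa": "release-candidate",
    "prod": "released",
}


def resolve_version(environment=None):
    """Pick the rule version for an environment, failing loudly on unknown names."""
    # DATA_ENV is an assumed variable name; fall back to "dev" when it is unset.
    env = (environment or os.environ.get("DATA_ENV", "dev")).lower()
    try:
        return VERSION_BY_ENVIRONMENT[env]
    except KeyError:
        raise ValueError(f"No rule version configured for environment {env!r}") from None


print(resolve_version("QA"))  # release-candidate
```

Keeping the mapping in one place means a CI/CD job only needs to set the environment name, and an unknown environment stops the run instead of silently testing against the wrong rules.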

Testing Named Versions from Databricks or Microsoft Fabric

The real magic happens when you can trigger these versioned data tests directly from your data platform – and that’s exactly what the HEDDA.IO PyRunner enables.

Using a simple configuration, you can run validations against specific named rule versions directly from:

- Databricks Notebooks (PySpark or Python)

- Microsoft Fabric Notebooks

- Any PySpark-compatible runtime

- .NET Interactive Notebooks

- Newly introduced WebRunner

- Single Row Processor for Streaming Data

- Integrated Preview Runner

Here’s how it works:

    %pip install <URL_TO_PYRUNNER> pyheddaio

    from pyheddaio.workflow import Hedda

    ApiUrl = "https://demo.hedda.io"
    ApiKey = "<Your API Key>"
    df = <dataFrame>
    version = "<Knowledge Base Version>"

    # Create a HEDDA.IO client
    hedda = Hedda.create(base_url=ApiUrl, api_key=ApiKey)

    # Configure project, knowledge base, domain mappings, etc.
    hedda.use_project("Demo Project")
    hedda.use_knowledge_base("Demo Knowledge Base", version)
    hedda.use_run("Default")

    # Pass data to the client
    hedda.use_data(df)

    # Use this run to store the results in
    execution = hedda.start()

Because this uses Named Versions, you can test unreleased rule logic safely and repeatedly – all without deploying it to production.

This gives your notebooks the power to:

- Validate in development using staging rule branches

- Compare behaviour across rule versions

- Integrate testing into scheduled jobs and CI/CD flows

- Ensure business logic is tested, versioned, and reproducible
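Comparing behaviour across rule versions might be wrapped in a small helper like the sketch below. It uses only the client calls shown in the snippet earlier in this article (`use_project`, `use_knowledge_base`, `use_run`, `use_data`, `start`). The helper names, the version names, and the `RecordingClient` stand-in are assumptions for illustration. The source does not describe what `start()` returns or whether a client can be reused, so the sketch creates a fresh client per version and hands the result back untouched.

```python
def run_version(create_client, df, version,
                project="Demo Project",
                knowledge_base="Demo Knowledge Base",
                run="Default"):
    """Run one validation against a single named rule version.

    `create_client` is any zero-argument callable returning a client, e.g.
    lambda: Hedda.create(base_url=ApiUrl, api_key=ApiKey) in a notebook.
    """
    hedda = create_client()
    hedda.use_project(project)
    hedda.use_knowledge_base(knowledge_base, version)
    hedda.use_run(run)
    hedda.use_data(df)
    return hedda.start()


def run_versions(create_client, df, versions):
    """Run the same data against several named versions, keyed by version name."""
    return {version: run_version(create_client, df, version) for version in versions}


class RecordingClient:
    """Stand-in that records calls so the helper can be tried without a server."""

    def __init__(self):
        self.calls = []

    def use_project(self, name):
        self.calls.append(("project", name))

    def use_knowledge_base(self, name, version):
        self.calls.append(("knowledge_base", name, version))

    def use_run(self, name):
        self.calls.append(("run", name))

    def use_data(self, df):
        self.calls.append(("data", len(df)))

    def start(self):
        return list(self.calls)


# Placeholder data and version names, used only for this dry run.
sample_rows = [{"id": 1}, {"id": 2}]
results = run_versions(RecordingClient, sample_rows, ["staging", "released"])
for version, execution in results.items():
    print(version, execution)
```

Passing the client factory in as a parameter keeps the helper testable in CI without credentials; in a notebook the stand-in is simply replaced by the real client.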

Summary

With HEDDA.IO 2.0, data testing is no longer a scattered task hidden in transformation scripts.

It becomes a first-class citizen in your architecture:

- Rules are version-controlled via Git

- Testing can happen on any version, in any environment

- Execution integrates natively with Databricks, Microsoft Fabric, or any modern PySpark platform

And you finally get repeatable, traceable, and automated validation at scale.