Why Run-based Validation in HEDDA.IO Solves One of the Hardest Problems in Enterprise Data

In many organizations, especially those operating internationally or across business units, data isn’t just spread across systems — it comes in different shapes, structures, and languages.

CRM systems differ by country. ERP instances evolve independently. Even the concept of a “customer” can vary subtly from region to region.

But business decisions, reports, and models still rely on one assumption:

Data can be compared, analyzed, and trusted as a whole.

This is where Data Quality gets hard. Not because the values are wrong — but because the context is different.

A Real-World Challenge: Global CRM, Local Differences

Consider a company with sales teams in Germany, France, and Italy. Each region has its own CRM system:

- Germany uses Microsoft Dynamics

- France runs Salesforce

- Italy relies on a legacy in-house system

Each CRM stores customer records, but the field names, formats, and business logic differ:

- Germany stores Customer_ID, France uses client_id, Italy has CodiceCliente

- Some systems use email, others adresse_email

- Country codes may use ISO-2, ISO-3, or national codes

- Legal requirements around VAT, privacy, or contact info vary by market

Yet all three systems feed into a central data lake.

And at group level, one question keeps coming up:

“Why is the quality of our customer data so inconsistent?”

Why a One-Size-Fits-All Rulebook Won’t Work

To solve this, many organizations try to define a single set of rules: a golden list of quality expectations. But here’s the problem:

- A rule that’s valid in Germany (e.g., “VAT ID must be set”) may not apply in Italy

- A mandatory field in one system may not even exist in another

- Different teams may structure the same concept differently

This leads to constant exceptions, overrides, and eventually: rule fatigue.

What’s needed is a model that allows shared logic where possible, and localized logic where necessary — without duplicating validation infrastructure.

How HEDDA.IO Approaches the Problem

HEDDA.IO is built around the idea that validation logic should adapt to data — not the other way around. To support cross-system, cross-region validation at scale, it introduces the concept of Runs.

What is a Run?

In HEDDA.IO, a Run is not just an execution – it’s a context. A Run defines:

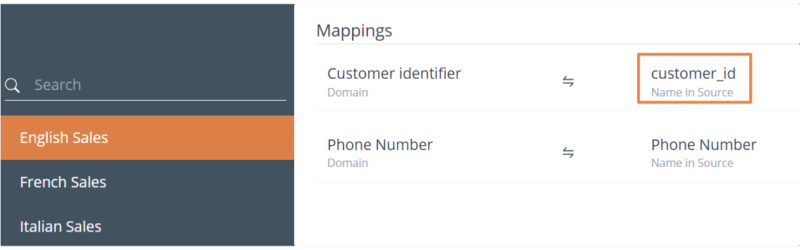

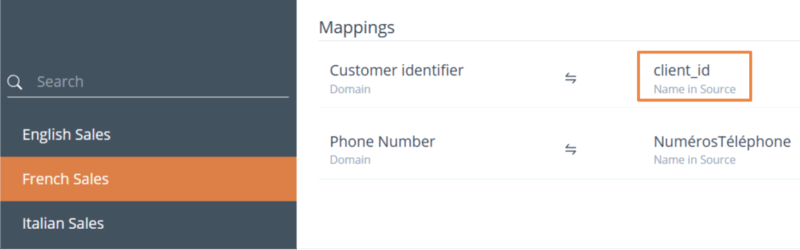

- How incoming fields are mapped to standard business concepts (called Domains)

- Which rules and Rulebooks are applied to those domains

This structure lets you validate the same knowledge base against multiple, heterogeneous sources — each on its own terms.
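As a rough illustration of the idea, here is a minimal Python sketch of a Run as a context object. It is not HEDDA.IO’s actual API or configuration format: the `Run` class, its `to_domains` method, the domain names `CustomerId` and `Email`, the Run names, and the Italian email field name are all hypothetical, chosen to mirror the Germany/France/Italy example. Each Run carries its own tags and field-to-domain mapping, so three differently shaped records resolve to the same domains.

```python
from dataclasses import dataclass, field


# Hypothetical model of a Run as a validation context (not HEDDA.IO's actual API).
@dataclass
class Run:
    name: str
    tags: frozenset[str]
    # Maps source field names to shared business concepts (domains).
    mapping: dict[str, str] = field(default_factory=dict)

    def to_domains(self, record: dict) -> dict:
        """Rename the mapped source fields of one record to domain names; unmapped fields are dropped."""
        return {domain: record[src] for src, domain in self.mapping.items() if src in record}


# One Run per source system; field names follow the example in the text (Italy's email field is assumed).
runs = [
    Run("crm-de", frozenset({"country:de"}), {"Customer_ID": "CustomerId", "email": "Email"}),
    Run("crm-fr", frozenset({"country:fr"}), {"client_id": "CustomerId", "adresse_email": "Email"}),
    Run("crm-it", frozenset({"country:it"}), {"CodiceCliente": "CustomerId", "email": "Email"}),
]

# Illustrative sample records, one per source system.
records = {
    "crm-de": {"Customer_ID": "DE-1001", "email": "anna@example.de"},
    "crm-fr": {"client_id": "FR-2002", "adresse_email": "luc@example.fr"},
    "crm-it": {"CodiceCliente": "IT-3003", "email": "gio@example.it"},
}

for run in runs:
    print(run.name, run.to_domains(records[run.name]))
```

Every printed record ends up with the same `CustomerId` and `Email` keys, which is what allows one set of domain-level rules to run against all three sources.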

The Power of Mappings and Tags

Each Run can define its own field-to-domain mapping. So whether the source field is Customer_ID, client_id, or CodiceCliente, HEDDA.IO knows:

→ This is the Customer Identifier. Validate it accordingly.

Beyond structure, HEDDA.IO uses Tags to select applicable RuleBooks. For example:

- A RuleBook tagged with country:de will only apply to Runs tagged country:de

- Shared logic (like “email must be valid”) lives in a shared RuleBook used by all Runs

- France-specific rules (e.g., “email domain must be .fr for local customers”) live in a RuleBook tagged country:fr and apply only to Runs carrying that tag

This avoids messy duplication and enables both reuse and granular control.
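The tag-based selection can be sketched in a few lines of Python. This is an assumption-laden illustration, not HEDDA.IO’s implementation: the `RuleBook` class, the `applicable_rulebooks` function, the RuleBook names, and the matching rule (a RuleBook applies when all of its tags are present on the Run, and an empty tag set means “shared”) are hypothetical. The email check is deliberately simplified, and the `VatId` domain is assumed.

```python
import re
from dataclasses import dataclass
from typing import Callable


# Hypothetical RuleBook model (not HEDDA.IO's API): required tags plus named rules
# that check a record already mapped to domains. An empty tag set marks a shared RuleBook.
@dataclass
class RuleBook:
    name: str
    tags: frozenset[str]
    rules: dict[str, Callable[[dict], bool]]


def applicable_rulebooks(run_tags: frozenset[str], rulebooks: list[RuleBook]) -> list[RuleBook]:
    """Assumed matching rule: a RuleBook applies if all of its tags are on the Run."""
    return [rb for rb in rulebooks if rb.tags <= run_tags]


def is_valid_email(value: str) -> bool:
    # Simplified syntax check, not a full RFC 5322 validator.
    return re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", value) is not None


shared = RuleBook("shared", frozenset(), {
    "email must be valid": lambda d: is_valid_email(d.get("Email", "")),
})
germany = RuleBook("de-rules", frozenset({"country:de"}), {
    "VAT ID must be set": lambda d: bool(d.get("VatId")),
})
france = RuleBook("fr-rules", frozenset({"country:fr"}), {
    # Simplified: the "for local customers" condition is omitted.
    "email domain must be .fr": lambda d: d.get("Email", "").endswith(".fr"),
})
rulebooks = [shared, germany, france]

for tag in ("country:de", "country:fr", "country:it"):
    selected = applicable_rulebooks(frozenset({tag}), rulebooks)
    print(tag, [rb.name for rb in selected])

# Apply every selected rule to one French record (already mapped to domains).
record = {"CustomerId": "FR-2002", "Email": "luc@example.com"}
failed = [
    rule
    for rb in applicable_rulebooks(frozenset({"country:fr"}), rulebooks)
    for rule, check in rb.rules.items()
    if not check(record)
]
print(failed)
```

Under this matching rule the Italian Run picks up only the shared RuleBook, while the German and French Runs add their country-specific RuleBooks on top of it, so the shared email rule is written once.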

What This Means in Practice

Instead of maintaining multiple parallel rule sets, teams can:

- Define standard validations once, and extend where needed

- Run validations on any source system — regardless of naming conventions

- Quickly onboard new countries or systems by simply configuring a new Run

- Trace which data failed which rules, where, and why
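Tracing “which data failed which rules, where, and why” largely depends on keeping enough context on every failure. The Python sketch below assumes a hypothetical `Failure` record (not HEDDA.IO’s result schema) that stores the Run, RuleBook, rule, domain, original source field, and offending value, and then counts failures per Run and rule. The sample failures and the `VAT_ID` source field are made up for illustration.

```python
from collections import Counter
from dataclasses import dataclass


# Hypothetical shape of one traced validation failure (not HEDDA.IO's result schema).
@dataclass(frozen=True)
class Failure:
    run: str           # which Run (source system / region) produced it
    rulebook: str      # which RuleBook the failing rule came from
    rule: str          # which rule failed
    domain: str        # the business concept that was checked
    source_field: str  # the original field name in the source system
    value: str         # the offending value


def summarize(failures: list[Failure]) -> Counter:
    """Count failures per (Run, rule) so each region can see where and why data failed."""
    return Counter((f.run, f.rule) for f in failures)


# Illustrative sample failures, not real results.
failures = [
    Failure("crm-fr", "fr-rules", "email domain must be .fr", "Email", "adresse_email", "luc@example.com"),
    Failure("crm-fr", "shared", "email must be valid", "Email", "adresse_email", "luc-at-example"),
    Failure("crm-de", "de-rules", "VAT ID must be set", "VatId", "VAT_ID", ""),
    Failure("crm-fr", "fr-rules", "email domain must be .fr", "Email", "adresse_email", "eve@example.org"),
]

for (run, rule), count in summarize(failures).most_common():
    print(f"{run}: {count} x {rule}")
```

Because each failure keeps its source field name alongside the domain, a regional team can map a group-level finding straight back to the column in its own system.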

This also means that business users in each region can own and review their specific rules — without affecting others.

Validation becomes both federated and governed.

Why It Matters

Without such a structure, global data quality initiatives fall into one of two traps:

- Over-centralization – where rules are rigid, generic, and mostly ignored

- Over-fragmentation – where each region builds its own quality logic, and nothing aligns

HEDDA.IO offers a third way: central intelligence with local autonomy.

It lets organizations scale validation without losing control, and improve quality without disrupting local realities.

Final Thoughts

When data comes from different systems, languages, and structures, enforcing “quality” isn’t about fixing values — it’s about understanding context.

HEDDA.IO’s Run architecture turns that context into a manageable asset, so that every validation, in every region, respects both global rules and local logic.

Want to learn how to bring scalable validation to your fragmented data landscape — without more complexity?